DiaMT: Dialogue in Machine Translation

Rachel Bawden, LIMSI, CNRS, Univ. Paris-Sud, Université Paris-Saclay

Tag Questions in Machine Translation

Research paper:

Machine Translation, it's a question of style, innit? The case of English tag questions

Rachel Bawden (2017)

In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing (EMNLP'17)

Note: minor improvements to the extraction process may lead to results that differ slightly from those reported in the paper.

Create the Tag Question Corpus Annotations

Data preparation

- Download OpenSubtitles raw data:

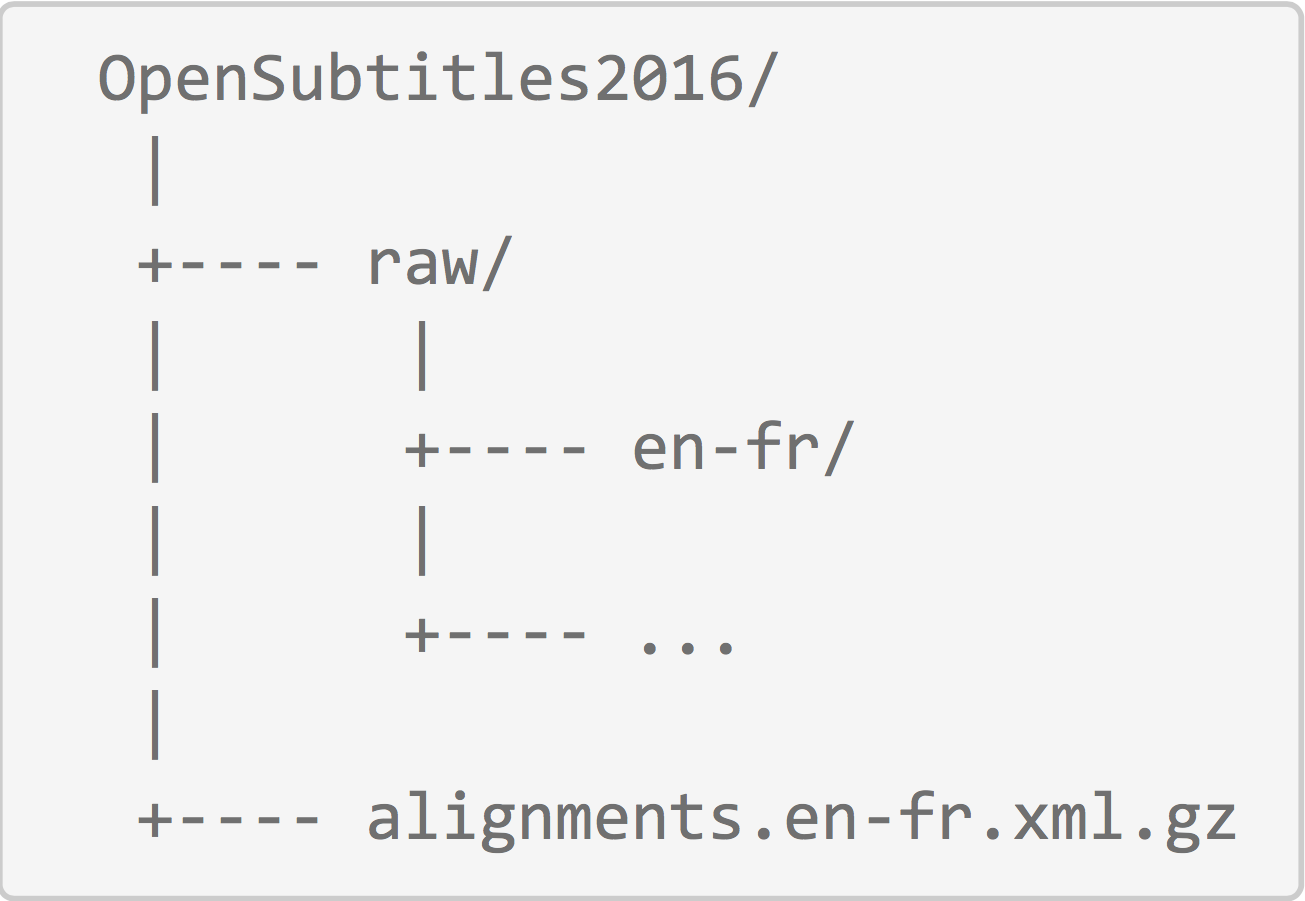

- the raw data for the language pair (separate files) from OPUS, i.e. the untokenised corpus files

- the sentence alignment file for the language pair, named alignments.src-trg.xml.gz (to be placed alongside the 'raw' directory)
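The alignment file links sentence IDs in the source and target subtitle documents. To illustrate its structure, here is a minimal Python sketch that streams the links out of the gzipped file. It assumes the XCES-style layout used by OPUS (`linkGrp` elements carrying `fromDoc`/`toDoc` attributes, containing `link` elements whose `xtargets` attribute has the form `src_ids;trg_ids`). The function name `read_alignments` is hypothetical and not part of this repository.

```python
import gzip
import xml.etree.ElementTree as ET
from typing import BinaryIO, Iterator, List, Optional, Tuple, Union

# (fromDoc, toDoc, source sentence IDs, target sentence IDs)
Link = Tuple[Optional[str], Optional[str], List[str], List[str]]

def read_alignments(source: Union[str, BinaryIO]) -> Iterator[Link]:
    """Hypothetical helper: yield one tuple per <link> in a gzipped OPUS
    alignment file (given as a path or an open binary file object)."""
    with gzip.open(source, "rb") as f:
        from_doc = to_doc = None
        for event, elem in ET.iterparse(f, events=("start", "end")):
            if event == "start" and elem.tag == "linkGrp":
                # Attributes are already available on the start event.
                from_doc, to_doc = elem.get("fromDoc"), elem.get("toDoc")
            elif event == "end" and elem.tag == "link":
                # xtargets looks like "2 3;2": IDs before ";" are source side.
                src, _, trg = elem.get("xtargets", ";").partition(";")
                yield from_doc, to_doc, src.split(), trg.split()
                elem.clear()  # free memory, since the real files are large
```

For example, `for from_doc, to_doc, src_ids, trg_ids in read_alignments("alignments.fr-en.xml.gz"): ...` (the file name is a placeholder). Streaming with `iterparse` avoids loading the whole alignment file into memory.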

- Install pre-processing tools

- MElt tokeniser/tagger

- mosesdecoder (to be used for truecasing)

- Change the paths in datasets/generic-makefile to correspond to your own tool installation and working directory paths

- OSDIR=/path/to/OpenSubtitles2016/raw

- TRUECASINGDIR=/path/to/mosesdecoder/scripts/recaser

- MAINDIR=/path/to/tag-questions-opensubs
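Editing the three paths by hand is all that is needed. If you prefer to script it, the following Python sketch rewrites plain `NAME=value` assignments in a Makefile's text. The helper `set_make_vars` is hypothetical and only handles simple `=` assignments (not `:=` or `?=`).

```python
import re
from typing import Dict

# Simple "NAME=value" or "NAME = value" assignments at the start of a line.
ASSIGNMENT = re.compile(r"^([A-Za-z_][A-Za-z0-9_]*)[ \t]*=.*$", re.MULTILINE)

def set_make_vars(makefile_text: str, values: Dict[str, str]) -> str:
    """Hypothetical helper: return makefile_text with the variables named in
    `values` set to their new values; all other lines are left untouched."""
    def replace(match: re.Match) -> str:
        name = match.group(1)
        return f"{name}={values[name]}" if name in values else match.group(0)
    return ASSIGNMENT.sub(replace, makefile_text)
```

Alternatively, GNU make lets you override a variable on the command line (e.g. `make extract OSDIR=/data/OpenSubtitles2016/raw`), since command-line assignments take precedence over ordinary assignments in the makefile and the files it includes.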

- Prepare true-casing data

- concatenate Europarl and Ted-talks data (full monolingual datasets) where available for the language

- replace by space

- tokenise with MElt:

MElt -l {en, fr, de, cs} -t -x -no_s -M -K

- change the path in the language-specific Makefile to the pre-processed data used for truecasing
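The concatenation step can be sketched in Python as follows. It assumes one plain-text file per corpus and language (the file names are placeholders) and skips any corpus file that does not exist, mirroring "where available". The replacement and MElt tokenisation steps are not reproduced here, and `concat_available` is a hypothetical helper.

```python
from pathlib import Path
from typing import Iterable, List

def concat_available(inputs: Iterable[str], output: str) -> List[str]:
    """Hypothetical helper: concatenate the existing input files into
    `output` (one sentence per line) and return the inputs actually used."""
    used = []
    with open(output, "w", encoding="utf-8") as out:
        for name in inputs:
            path = Path(name)
            if not path.is_file():
                continue  # e.g. no Ted-talks data for this language
            with path.open(encoding="utf-8") as f:
                for line in f:
                    # Ensure every line ends with a newline so files don't merge.
                    out.write(line if line.endswith("\n") else line + "\n")
            used.append(name)
    return used
```

For example, `concat_available(["europarl.de", "ted.de"], "truecasing.de")` would produce a single German file, ready for the MElt command above.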

- Extract parallel corpus from OpenSubtitles2016

- cd datasets/langpair (e.g. de-en, fr-en, cs-en)

- make extract
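Conceptually, extraction turns each alignment link into a sentence pair. Here is a minimal Python sketch, assuming the sentences of each document have already been read into dictionaries keyed by their OPUS sentence ID. Links that join several sentences on one side are concatenated with a space, and links with an empty side (1:0 or 0:1) are skipped; these choices are assumptions for illustration and may differ from what `make extract` actually does. `pair_sentences` is a hypothetical name.

```python
from typing import Dict, Iterable, Iterator, List, Tuple

def pair_sentences(src: Dict[str, str], trg: Dict[str, str],
                   links: Iterable[Tuple[List[str], List[str]]]
                   ) -> Iterator[Tuple[str, str]]:
    """Hypothetical helper: yield (source, target) sentence pairs."""
    for src_ids, trg_ids in links:
        if not src_ids or not trg_ids:
            continue  # skip unaligned sentences (1:0 or 0:1 links)
        # Many-to-one links: join the sentences on each side with a space.
        yield (" ".join(src[i] for i in src_ids),
               " ".join(trg[i] for i in trg_ids))
```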

- Pre-process data

- cd datasets/langpair (e.g. de-en, fr-en, cs-en)

- change the truecasing data path in the language-specific Makefile. The truecasing data must be a single file per language, tokenised with MElt and cleaned using the clean_subs.py script.

- Preprocessing (cleaning, blank line removal, tokenisation, truecasing and division into sets):

make preprocess
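Truecasing restores the most likely case of words whose capitalisation is uninformative, typically the first word of a sentence. The pipeline uses the Moses recaser scripts for this. The Python sketch below only illustrates the idea in simplified form and is not a port of the Moses scripts: it learns the most frequent surface form of each word from non-initial positions, then recases only the sentence-initial token. Both function names are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List

def train_truecaser(sentences: Iterable[List[str]]) -> Dict[str, str]:
    """Map each lowercased token to its most frequent surface form,
    ignoring sentence-initial tokens (their case is not informative)."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for tokens in sentences:
        for tok in tokens[1:]:
            counts[tok.lower()][tok] += 1
    return {lc: c.most_common(1)[0][0] for lc, c in counts.items()}

def truecase(tokens: List[str], model: Dict[str, str]) -> List[str]:
    """Recase the first token using the model; unknown words are kept."""
    if not tokens:
        return tokens
    return [model.get(tokens[0].lower(), tokens[0])] + tokens[1:]
```

For instance, if "the" is mostly seen lowercased mid-sentence, a sentence starting with "The" is recased to "the", while "Paris" keeps its capital.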

Annotate tag questions

- cd subcorpora/langpair

- make annotate (to get the line numbers of each type of tag question)

- make getsentences (to extract the sentences corresponding to the line numbers)
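To give a rough idea of what these two steps involve, the sketch below matches a few English tag question shapes at the end of tokenised lines, records the 1-based line numbers per type, and then retrieves the corresponding sentences. The patterns and the two type names (`grammatical`, `lexical`) are simplified hypotheticals, not the rules used by `make annotate`, and they will miss many tags (and match some non-tags).

```python
import re
from typing import Dict, List

# Hypothetical, simplified patterns (not the repository's annotation rules).
# "ca"/"wo"/"ai" cover split forms such as "ca n't", "wo n't", "ai n't".
TAG_PATTERNS = {
    "grammatical": re.compile(
        r",\s*(?:is|are|am|was|were|do|does|did|have|has|had|can|ca|could|"
        r"will|wo|would|shall|should|must|may|might|ai)(?:\s?n't)?\s+"
        r"(?:i|you|he|she|it|we|they|there)\s*\?\s*$", re.IGNORECASE),
    "lexical": re.compile(
        r",\s*(?:right|eh|okay|innit|yeah|huh)\s*\?\s*$", re.IGNORECASE),
}

def annotate(lines: List[str]) -> Dict[str, List[int]]:
    """Return, for each tag type, the 1-based numbers of matching lines."""
    found: Dict[str, List[int]] = {name: [] for name in TAG_PATTERNS}
    for number, line in enumerate(lines, start=1):
        for name, pattern in TAG_PATTERNS.items():
            if pattern.search(line):
                found[name].append(number)
                break  # at most one type per line
    return found

def get_sentences(lines: List[str], line_numbers: List[int]) -> List[str]:
    """Extract the lines corresponding to 1-based line numbers."""
    return [lines[n - 1] for n in line_numbers]
```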

Translate sentences

- Store all translations in translations/langpair and name them testset.translated.{cs,de}-en, where testset is trainsmall, devsmall or testsmall

- Czech and German to English translation (Nematus):

- Download the Czech-to-English and German-to-English systems (WMT'16 UEdin submissions; Sennrich et al., 2016)

- Decode the trainsmall, devsmall and testsmall sets using the translation scripts distributed with these systems

- French-English translation (Moses model)

- Select 3M random sentences from the training set for training and 2k different random sentences from the same set for tuning.

- Data cleaned with MosesCleaner, duplicates removed

- Three 4-gram language models trained with KenLM on (i) Europarl, (ii) Ted-talks (when available) and (iii) the OpenSubtitles2016 training set

- Symmetrised alignments, tuned with Kbmira
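The data selection for the Moses system can be sketched as follows: exact duplicate sentence pairs are removed, then a single random sample provides disjoint training and tuning indices, so the 2k tuning sentences never overlap with the 3M training sentences. The helper names, the seed and the order of deduplication and sampling are assumptions for illustration.

```python
import random
from typing import Iterable, List, Tuple

def dedup_pairs(pairs: Iterable[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Hypothetical helper: drop exact duplicate pairs, keeping first order."""
    seen = set()
    unique = []
    for pair in pairs:
        if pair not in seen:
            seen.add(pair)
            unique.append(pair)
    return unique

def select_train_tune(n_lines: int, n_train: int, n_tune: int,
                      seed: int = 1) -> Tuple[List[int], List[int]]:
    """Hypothetical helper: pick disjoint random line indices for training
    and tuning from a single sample without replacement."""
    if n_train + n_tune > n_lines:
        raise ValueError("not enough lines for the requested sample sizes")
    rng = random.Random(seed)  # fixed seed for reproducible selection
    picked = rng.sample(range(n_lines), n_train + n_tune)
    return sorted(picked[:n_train]), sorted(picked[n_train:])
```

With a deduplicated list `pairs`, the call would be `select_train_tune(len(pairs), 3_000_000, 2_000)`. Drawing both subsets from one sample guarantees disjointness without any rejection loop.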

- Tokenise all translations:

MElt -l en -t -x -no_s -M -K

- name the tokenised outputs testset.translated.melttok.{cs,de}-en
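To keep the file naming consistent, the expected input and output names for one language pair can be generated programmatically. This Python sketch assumes the translations directory is relative to the current working directory; `translation_files` is a hypothetical helper.

```python
from pathlib import Path
from typing import List, Tuple

TESTSETS = ("trainsmall", "devsmall", "testsmall")

def translation_files(langpair: str, root: str = "translations"
                      ) -> List[Tuple[Path, Path]]:
    """Hypothetical helper: (raw translation, MElt-tokenised translation)
    paths for one language pair such as "de-en"."""
    directory = Path(root) / langpair
    return [(directory / f"{t}.translated.{langpair}",
             directory / f"{t}.translated.melttok.{langpair}")
            for t in TESTSETS]
```

For example, `[raw for raw, _ in translation_files("cs-en") if not raw.exists()]` lists any translations still missing before tokenisation.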

Tag Question classification

- Save the Czech and German lexica to the resources/ folder (lexicon-cs.ftl.gz and lexicon-de.ftl.gz). For now, contact me for the lexica (links up shortly).

- MorfFlex. Jan Hajič and Jaroslava Hlaváčová. 2013.

- DeLex, a freely-avaible, large-scale and linguistically grounded morphological lexicon for German. Benoît Sagot. 2014. In Proceedings of the Language Resources and Evaluation Conference (LREC’14).
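Once the lexica are in resources/, they can be loaded into a mapping from word forms to their analyses. The sketch below assumes each line of the gzipped .ftl file holds a tab-separated word form, tag and lemma; this layout is an assumption, so check it against the actual files before use. `load_lexicon` is a hypothetical helper.

```python
import gzip
from collections import defaultdict
from typing import Dict, Set, Tuple

def load_lexicon(path: str) -> Dict[str, Set[Tuple[str, str]]]:
    """Hypothetical helper: map each word form to its (tag, lemma) analyses.
    ASSUMPTION: lines are tab-separated "form<TAB>tag<TAB>lemma"."""
    lexicon: Dict[str, Set[Tuple[str, str]]] = defaultdict(set)
    with gzip.open(path, "rt", encoding="utf-8") as f:
        for line in f:
            fields = line.rstrip("\n").split("\t")
            if len(fields) < 3:
                continue  # skip blank or malformed lines
            form, tag, lemma = fields[:3]
            lexicon[form].add((tag, lemma))
    return dict(lexicon)
```

For example, `load_lexicon("resources/lexicon-de.ftl.gz")`. Storing a set per form keeps all analyses of ambiguous forms.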

- Update paths in classify_lang.sh

- cd classify/lang_pair

- bash classify_lang.sh